Infinion

Time snip 1

Time snip 2

Prof. John Kelly goes over the battery construction and cell measurement/balancing in the Chevy Volt (which has a similar architecture to the Spark EV).

What does the BMS do?

The BECM (Battery Energy Control Module) monitors the state of the 96s2p (96 in series, 2 in parallel) cells in the 2015/2016 Spark EV. The 2014 has a slightly different cell configuration, 112s3p (112 in series, 3 in parallel).

How does it balance cells and how much power can it handle?

The only issue with this BECM design is its cell balancing strategy. When the Spark EV charges to 100%, the BECM top-balances the cells by discharging them through up to 96 groups of resistors until the lowest cells reach the average cell voltage. Whether you charge from an L1/L2 EVSE or DCFC, the power tapers near the top to roughly 2-3 kW or less. Dividing that power by 96 (or 112) groups, and assuming a full group has to bypass its whole share, each group must dissipate up to 3000 W / 96 groups ≈ 31 W of charging power.
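A quick check of the per-group figure, under the same assumption the post makes: the charging power is shared evenly across the series groups, and a full group has to shunt its entire share through its resistor. The 2 kW and 3 kW values simply bracket the post's 2-3 kW ballpark; they are not measurements.

```python
def shunt_power_per_group(charge_power_w: float, n_groups: int) -> float:
    """Worst-case power one group's balancing resistor would dissipate,
    assuming charge power splits evenly across the series groups and a
    full group must bypass its whole share."""
    return charge_power_w / n_groups


for groups in (96, 112):          # 2015/2016 (96s2p) and 2014 (112s3p) packs
    for power in (2000, 3000):    # the post's 2-3 kW tapered charging ballpark
        per_group = shunt_power_per_group(power, groups)
        print(f"{groups} groups @ {power} W -> {per_group:.1f} W per group")
```

For the 2014 pack, the same 3 kW works out to roughly 27 W per group.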

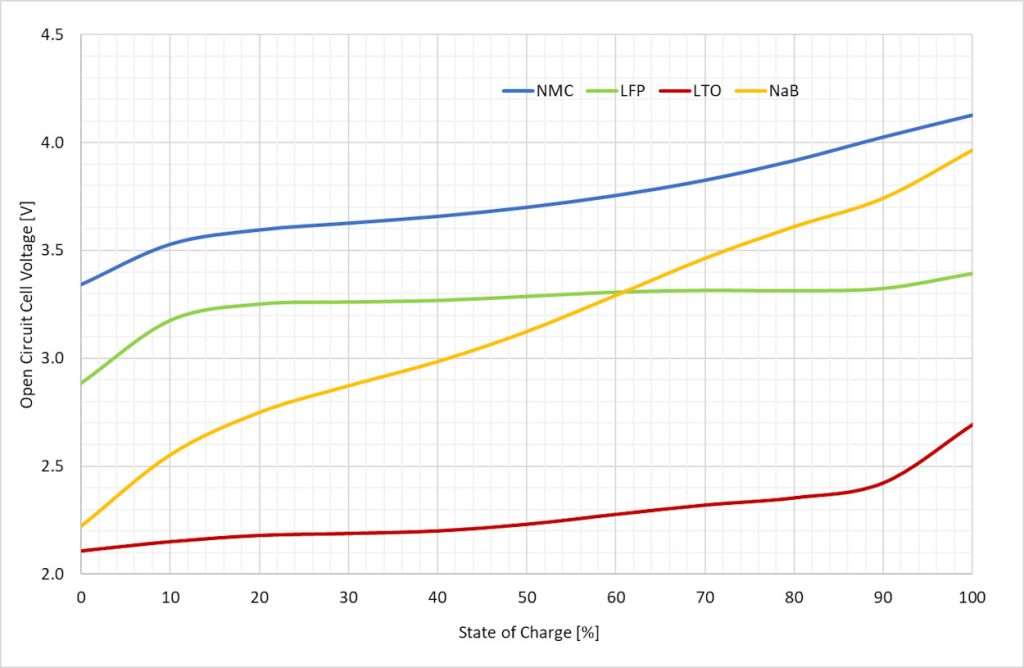

Using the electrical power formula P = IV, where P is power in watts, I is current in amps, and V is cell voltage, and solving for current: I = P/V = 31 W / 4.1 V ≈ 7.6 A per group for a 96-group balancer.
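The same current can be cross-checked from the pack side: near full charge, 96 groups in series sit at about 96 × 4.1 V ≈ 394 V, so 3 kW of charging pushes roughly the same 7.6 A through every group. A minimal sketch, using the post's 4.1 V cell voltage:

```python
CELL_VOLTAGE_V = 4.1  # near-full cell voltage used in the post


def shunt_current(power_w: float, cell_voltage_v: float = CELL_VOLTAGE_V) -> float:
    """I = P / V for one cell group's bypass path."""
    return power_w / cell_voltage_v


def pack_current(charge_power_w: float, n_groups: int,
                 cell_voltage_v: float = CELL_VOLTAGE_V) -> float:
    """Series current through the whole pack: I = P / (n * V_cell)."""
    return charge_power_w / (n_groups * cell_voltage_v)


per_group = shunt_current(3000 / 96)   # 31.25 W shunted by one group
whole_pack = pack_current(3000, 96)    # 3 kW into the 96s string
print(f"per-group bypass current: {per_group:.2f} A")
print(f"pack series current:      {whole_pack:.2f} A")
```

Both routes give the same number because the per-group power is just the pack power divided by the number of groups, and the pack voltage is the cell voltage multiplied by that same number.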

So we can infer that the BECM's balancing circuit must be rated for at least about 8 A of DC current in each of the 96 (or 112) cell groups.

What's the problem with weak cells?

Say you have a Spark EV that originally had 18.2 kWh of capacity with ~90% depth of discharge. After 10 years, its capacity has dropped to around 14 kWh usable, or about 22% degradation, which works out to 0.146 kWh (about 42 Ah) per cell group. However, when you drive down to 90% depth of discharge, the car quickly shuts down with "propulsion power reduced". It turns out only two of your cell groups have degraded to 146 Wh, and the rest are actually much healthier at, say, 167 Wh. The BECM detected the weak cells dropping below 3 V and reduced power for the entire battery. That isn't enough, and the car shuts down after only a few miles as the weak cells cross the 2.5 V cutoff.

In other words, your EV goes into turtle mode early and shuts down early because your weakest cells need to be protected, which restricts your depth of discharge.
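The scenario's per-group numbers can be reproduced from the pack figures. The ~3.5 V average discharge voltage used for the Wh-to-Ah conversion is an assumption (the post doesn't state one); with exactly 14 kWh the degradation comes out near 23%, in line with the post's ~22% for "around 14 kWh".

```python
N_GROUPS = 96          # 2015/2016 pack, 96 series groups
ORIGINAL_KWH = 18.2    # original capacity from the post
USABLE_KWH = 14.0      # degraded usable capacity from the post
AVG_CELL_V = 3.5       # assumed average discharge voltage for Wh -> Ah

degradation = 1 - USABLE_KWH / ORIGINAL_KWH
wh_per_group = USABLE_KWH * 1000 / N_GROUPS
ah_per_group = wh_per_group / AVG_CELL_V

print(f"degradation:        {degradation:.0%}")
print(f"energy per group:   {wh_per_group:.0f} Wh")
print(f"capacity per group: {ah_per_group:.0f} Ah")
```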

Whenever your Spark EV top-balances, the weak cells throw away about 31 W (roughly 8 A) of charging power so they don't become overcharged. Since the difference in capacity between the weak and healthy cell groups in our theoretical Spark EV is 167 Wh - 146 Wh = 21 Wh, the car spends about 40 minutes balancing on L2 and around 1h40m on L1.
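The balancing times follow from dividing the 21 Wh gap by the power each full group has to shunt. The ~1.2 kW reaching the pack on L1 is an assumption (a 120 V / 12 A outlet supplies about 1.4 kW at the wall, before charger losses); the post only gives the resulting time.

```python
GAP_WH = 167 - 146   # extra energy the healthy groups still need (Wh)
N_GROUPS = 96


def balancing_minutes(charge_power_w: float, gap_wh: float = GAP_WH,
                      n_groups: int = N_GROUPS) -> float:
    """Minutes for the healthy groups to absorb `gap_wh` while a full weak
    group shunts its share (charge_power_w / n_groups) through its resistor."""
    per_group_w = charge_power_w / n_groups
    return gap_wh / per_group_w * 60


for label, power in (("L2 (~3 kW)", 3000), ("L1 (~1.2 kW, assumed)", 1200)):
    print(f"{label}: {balancing_minutes(power):.0f} min")
```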

And so?

And so the BECM protects the weak cells from being overcharged, but your battery pack behaves as if it had 96 weak cell groups of the smaller capacity, even though it actually stores more energy that you can't use.
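A small model of why the pack "behaves like 96 weak cells": in a series string every group carries the same current, so discharge stops as soon as the first group is empty. This sketch uses the post's example (two groups at 146 Wh, the other 94 at 167 Wh), ignores voltage differences between groups, and treats the "stored" total as the ideal lossless upper bound; a real active balancer would lose some of that in transfer.

```python
def usable_without_balancing(group_wh: list[float]) -> float:
    """Series string: discharge stops when the weakest group is empty,
    so every group only delivers as much as the weakest one holds."""
    return len(group_wh) * min(group_wh)


def stored_total(group_wh: list[float]) -> float:
    """Energy actually held by all groups (ideal, lossless balancing bound)."""
    return sum(group_wh)


groups = [146.0] * 2 + [167.0] * 94   # the post's example pack
usable = usable_without_balancing(groups)
stored = stored_total(groups)
print(f"usable without balancing: {usable / 1000:.2f} kWh")
print(f"energy actually stored:   {stored / 1000:.2f} kWh")
print(f"stranded:                 {(stored - usable) / 1000:.2f} kWh")
```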

What's the solution?

The solution is to add an active bottom balancer that charges the weak cells by siphoning energy from the strong ones.

How could it be done?

It would use the same balancing lines that the BECM's top balancer uses during charging. In the video above, there are service connectors for battery testing with pins that connect to each of the 16 cell terminals on top of each battery module.

In the service manual, the connectors I am referring to are X5-X7, X9, X10, and X13. These are male connectors; the mating female connector is https://www.aptiv.com/en/solutions/connection-systems/catalog/item?language=en&id=15345477_en

Once a cell imbalance above a minimum threshold is detected in a weak cell group, a 16-cell active balancer would start transferring energy from the stronger cells in its group, at under 30 W per cell group, while the Spark EV is being driven, compensating for the difference in capacity.
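A toy simulation of this idea, not a model of any specific product or of the BECM: one 16-group module is discharged at a constant power per group, and whenever the spread between the fullest and emptiest group exceeds a threshold, a hypothetical balancer moves up to 30 W from the strongest group to the weakest. The `simulate_drive` function, the ~10 kW average drive power, the 1 Wh trigger threshold, the 90% transfer efficiency and the 1-minute time step are all illustrative assumptions.

```python
def simulate_drive(groups_wh, draw_w, balancer_w=30.0, threshold_wh=1.0,
                   efficiency=0.9, step_s=60.0):
    """Hypothetical toy model: minutes of driving until the first group is empty.

    groups_wh    -- starting energy of each series cell group (Wh)
    draw_w       -- drive power drawn from each group (same current in series)
    balancer_w   -- assumed active balancer transfer power (W); 0 disables it
    threshold_wh -- assumed imbalance that triggers a transfer
    efficiency   -- assumed fraction of moved energy that reaches the weak group
    """
    remaining = list(groups_wh)
    dt_h = step_s / 3600.0
    minutes = 0.0
    while True:
        # Every group in the series string supplies the same share of the load.
        remaining = [e - draw_w * dt_h for e in remaining]
        if min(remaining) <= 0:
            return minutes  # weakest group empty: the car would cut power here
        weak = remaining.index(min(remaining))
        strong = remaining.index(max(remaining))
        if balancer_w > 0 and remaining[strong] - remaining[weak] > threshold_wh:
            moved = balancer_w * dt_h
            remaining[strong] -= moved             # taken from the strongest group
            remaining[weak] += moved * efficiency  # delivered after conversion losses
        minutes += step_s / 60.0


module = [146.0] + [167.0] * 15   # one weak group in a 16-group module
draw = 10_000 / 96                # assumed ~10 kW average drive, split over 96 groups

without = simulate_drive(module, draw, balancer_w=0.0)
with_balancer = simulate_drive(module, draw)
print(f"without balancer: {without:.0f} min")
print(f"with balancer:    {with_balancer:.0f} min")
```

Under these assumptions, the weak group catches up with the others well before the end of the drive, so the module runs until nearly all of its stored energy is used instead of stopping when the 146 Wh group is empty.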

There would need to be 6 balancers in total, one for each 16-cell service connector (6 × 16 = 96 cell groups). This would maximize the usable capacity and depth of discharge of the pack and extend the service life of the vehicle: the BECM's top balancer would still protect the cell groups from overcharging, while the bottom balancer would protect them from overdischarge, which the HPCM2 previously achieved only by reducing power and shutting the vehicle down early.

Here's one ready-made solution for exactly this purpose:

https://100balancestore.com/collect...tive-balancer-module-for-lithium-battery-pack